Jagennath Hari

I'm a software engineer at Omni Instrument in San Francisco, where I work on computer vision and robotics for manufacturing. Previously, at L&A, I worked on L&Aser™ and AgCeption™. I did my Master's at New York University, where I was advised by Farshad Khorrami. I like to build robotic systems.

Research

I'm interested in computer vision, robotics, and SLAM. My research focuses on scene understanding, HD maps, and 3D reconstruction using a range of sensors (camera, LiDAR, RADAR, IMU, GPS, etc.).

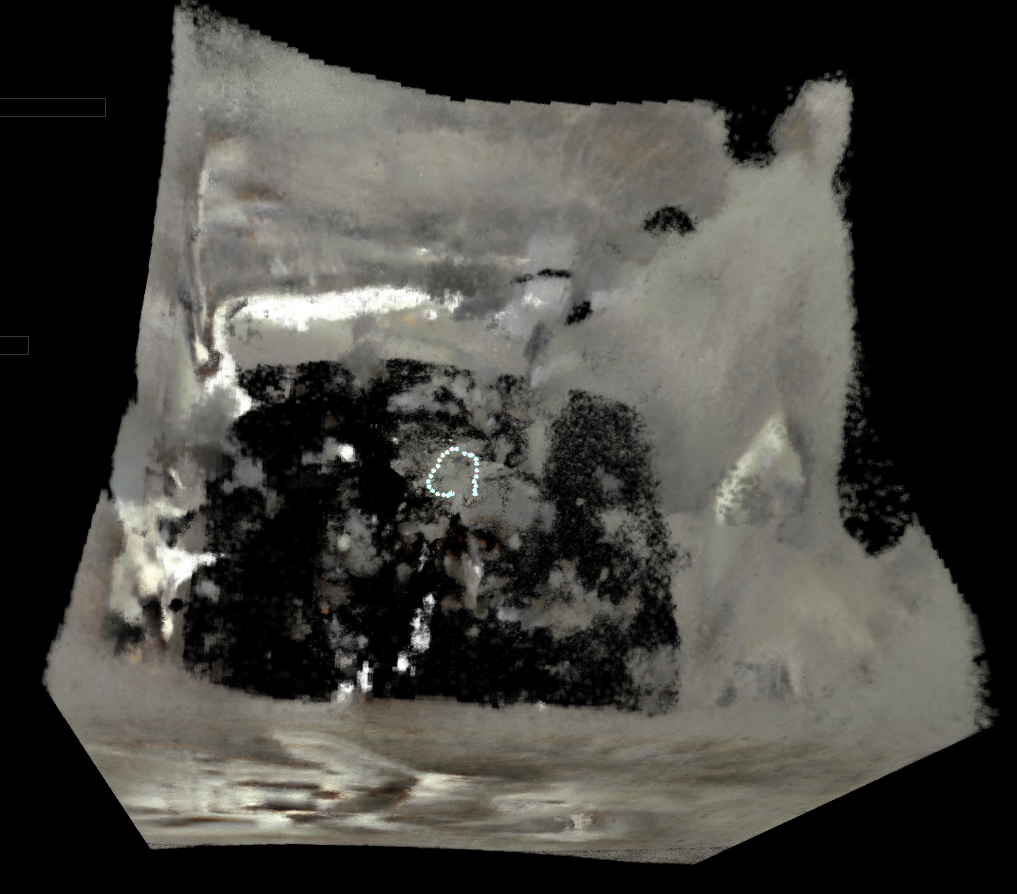

Singularity3D: World Synthesis with Spherical SfM and Feature 3DGS

J. Hari

GitHub, 2025

Project Page

Single-image world synthesis using a generative panorama prior, orbit-conditioned spherical SfM, and Semantic 3D Gaussian scene reconstruction.

SpatialFusion-LM: Foundational Vision Meets SpatialLM

J. Hari

GitHub, 2025

Project Page

SpatialFusion-LM is a unified framework for spatial 3D scene understanding from monocular or stereo RGB input. It integrates depth estimation, differentiable 3D reconstruction, and spatial layout prediction using large language models.

Edge Optimized Tracking System

J. Hari

GitHub, 2024

Project Page

The Edge Optimized Tracking System is a real-time object tracking pipeline that combines deep learning, tracking algorithms, and filtering techniques to accurately detect and follow objects. Designed for high-speed applications, it ensures efficient and reliable performance on edge devices.

Floor Plan Based Active Global Localization and Navigation Aid for Persons With Blindness and Low Vision

R. G. Goswami, H. Sinha, P. V. Amith, J. Hari, P. Krishnamurthy, J. Rizzo, F. Khorrami

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2025

IEEE Robotics and Automation Letters (RA-L), 2024

Project Page / Video / IEEE Xplore®

A real-time navigation method that uses floor plans and sensor data to help individuals with blindness or low vision explore and localize themselves indoors, without prior training or a known starting position.

RGBD-3DGS-SLAM

J. Hari

GitHub, 2024

Project Page

RGBD-3DGS-SLAM is a SLAM system that combines 3D Gaussian Splatting with monocular depth estimation to produce accurate point clouds and visual odometry. It uses UniDepthV2 to infer depth and intrinsics from RGB images, and can optionally incorporate depth maps and camera info for improved results.

CUDA Accelerated Visual Inertial Odometry Fusion

J. Hari

GitHub, 2024

Project Page

A real-time system that fuses camera and motion sensor data using an Unscented Kalman Filter. It enables accurate and efficient tracking of a robot’s movement, supporting reliable navigation in autonomous systems.

DepthStream Accelerator

J. Hari

GitHub, 2023

Project Page

A high-performance monocular depth estimation system designed for real-time use in robotics, autonomous navigation, and 3D perception. It delivers fast and accurate depth predictions, making it well-suited for applications requiring low-latency visual understanding.

FusionSLAM: Unifying Instant NGP for Monocular SLAM

J. Hari

GitHub, 2023

Project Page

FusionSLAM combines feature matching, depth estimation, and neural scene representation to improve mapping and localization using just a single camera. This approach enhances the accuracy and efficiency of monocular SLAM in real-world environments.

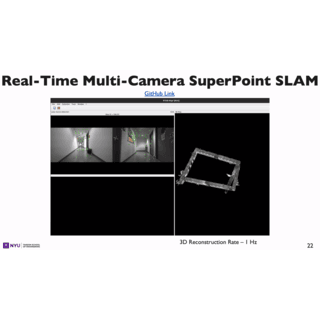

Real-Time Multi Camera Superpoint SLAM

J. Hari

GitHub, 2023

Project Page

A graph-based SLAM system that fuses visual data from multiple cameras using learned feature points for robust mapping and localization. It is designed for accurate 3D reconstruction and real-time operation in complex environments.

Creating a Resilient SLAM System for Next-Gen Autonomous Robots

J. Hari

New York University Tandon School of Engineering

ProQuest Dissertations & Theses, 2023

Video / Paper

Combines machine learning with SLAM to help robots build more accurate maps and navigate better. Introduces bi-directional loop closure to improve reliability when revisiting places.

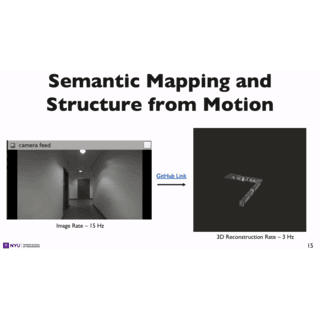

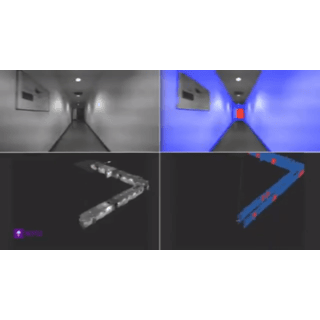

Efficient Real-Time Localization in Prior Indoor Maps Using Semantic SLAM

R. G. Goswami, P. V. Amith, J. Hari, A. Dhaygude, P. Krishnamurthy, J. Rizzo, F. Khorrami

International Conference on Automation, Robotics and Applications (ICARA), 2023

Video / IEEE Xplore®

A method for determining precise indoor location using floor plan images and real-time RGB-D and IMU sensor data, without relying on GPS. The approach aligns a live semantic map with the architectural layout to enable accurate global localization.

Other Contributions

Design and source code from Jon Barron's website.